After hearing a lot of good things about Jekyll for basic static sites (like blogs), I decided to give it a try. I love the simplicity and the control I have. Thus, I am migrating my blogging, for the foreseeable future, to the Jekyll-powered joshbranchaud.com.

Josh Branchaud

I tell machines what to do everyday

Getting Serious with JavaScript

Hudl recently hosted a developer meetup with a talk given by Matt Steele. The talk was titled Treating JavaScript Like a Real Language with an alternative title of What Zelda taught me about Front End Engineering.

The crux of the talk had to do with the inherent challenges and perils of JavaScript and the necessity to treat it as a real language by using the kinds of tools and tricks of the trade that we employ with other languages like Java, C++, etc. It was an engaging talk with a whirlwind of technologies and tools, so I thought I would take this opportunity to transcribe the tools and technologies into a blog post.

Development and Syntax

- Zen-Coding – a new way of writing HTML and CSS code

- JSLint – the JavaScript code quality tool

- JSHint – another JavaScript code quality tool

- JSHint Platforms – plugins and resources for Vim and everything else

- Lint JavaScript On Commit

Testing

- Jasmine – a behavior-driven development framework for testing JavaScript code

- Sinon.js – Standalone test spies, stubs and mocks for JavaScript. No dependencies, works with any unit testing framework.

- JS-ImageDiff – JavaScript / Canvas based image diff utility with Jasmine matchers for testing canvas

Build Tools

- Grunt – a task-based command line build tool for JavaScript projects

- Yeoman – a robust and opinionated set of tools, libraries, and a workflow that can help developers quickly build beautiful, compelling web apps

- Jenkins – an open-source continuous integration server

Resources

- js-assessment – a test-driven approach to assessing JS skills

- Paul Irish’s Frontend Bundle – Frontend development exploration, techniques, tips. Lots of JavaScript

- MDN’s JavaScript

- WebPlatform.org – an open community of developers building resources for a better web

Books

Beyond JavaScript

- CoffeeScript – a little language that compiles into JavaScript

- TypeScript – a typed superset of JavaScript that compiles to plain JavaScript for application-scale JavaScript development

Missing Something?

Do you do a lot of JavaScript? Have we neglected to mention something that you would swear by? Join the conversation at HackerNews or hit me up on Twitter.

Check out the slides from the talk as well as a video of the talk. Also, Matt does a lot of cool stuff, so keep tabs on him via matthew-steele.com or @mattdsteele.

One Change To Your Eclipse Configuration That Will Make A Big Difference

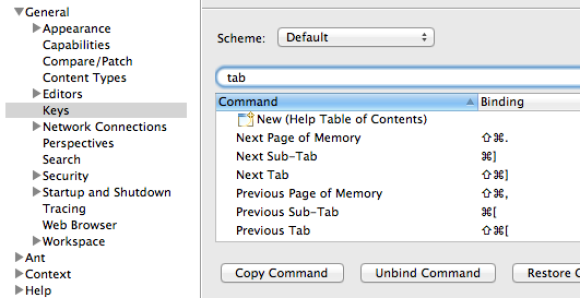

What is one quick change to your Eclipse IDE configuration that will save your sanity and a little time? It is as simple as adding keyboard shortcuts for moving left and right through your editor tabs.

Whether it be Chrome, Firefox, Terminal, Sublime Text, or a large number of other applications, there is a handy pair of key bindings that will allow you to easily navigate between the various tabs you have open. On my Mac, I can hop to the left with Cmd+Shift+{ and likewise I can move to the right with Cmd+Shift+}. This is an easy and natural way to navigate through your tabs and keeps you from having to reach for the mouse/trackpad quite so often.

The bad news is that the Eclipse IDE does not have this key binding set up by default. The good news is that it is extremely easy to set up.

- Navigate to Eclipse > Preferences… (Cmd+,)

- Navigate to General > Keys

- Filter by ‘tab’

- Select ‘Next Tab’ from the list

- Give the ‘binding’ textfield focus and type Cmd+Shift+}

- Select ‘Previous Tab’ from the list

- Give the ‘binding’ textfield focus and type Cmd+Shift+{

- Click Apply

- Click OK

- Enjoy your speedy tab navigation

Note: It seems that key bindings do not span across workspaces. If you have multiple workspaces, you will have to set this up in each workspace individually.

There is a related Stack Overflow article.

Scraping Business Listings in Omaha with Python

I recently participated in HackOmaha, a small hackathon located in Omaha, NE (coverage and results). I needed a list of all the businesses in Omaha, but did not have one readily available. Here is how I got that list.

Disclaimer: I don’t purport that this is some optimal solution to the problem, but rather want to provide some insight for jumping into this kind of problem for the first time. There is plenty of room for improvements and increased efficiency.

The Context

There were 3 local government data sets and our challenge was to leverage that data to do something useful. I was interested in doing something with the city council agendas because these seemed like they would be the most challenging. Each agenda is a bunch of natural language describing the weekly agenda in PDF format. There were other likeminded people at HackOmaha, so we teamed up to see what we could accomplish.

Part of the team began downloading the PDFs, extracting the text, and storing it in an ElasticSearch database. I worked with another part of the team to devise a strategy for doing Natural Language Processing (NLP) on the content of these agendas. Our goal was to extract business/company names, people’s names, and addresses from these agendas. To aid this process, I figured we would need a list of businesses in Omaha. This would supposedly make things easier on the NLP algorithm(s).

The Problem

A brief amount of research did not turn up any easily accessible business listing for Omaha. I did find http://www.owhyellowpages.com/, which offered a business search feature for Omaha businesses. It seemed pretty comprehensive (there looked to be more than 40,000 business listings). The problem, though, is that you could only access these 10 at a time in the browser.

The Solution

Web Scraping. We didn’t have a lot of time, but I figured if I could set up an automated process to extract the business information from the HTML pages, then I would be able to move on to something else useful. Here is what I needed to do:

- Identify a deterministic way to uniquely access all the business listing pages

- Identify the HTML elements that contained the data I wanted (business name, address, city, state)

- Scrape the HTML documents for the aforementioned data

- Store the business data (preferably in a CSV file to start)

1. The business listings were easy enough to access uniquely and deterministically. The first 10 results would show up at http://www.owhyellowpages.com/search/business?page=0 and simply incrementing the value for page would get the subsequent sets of results. There were pages ranging from 0 to 4215, so I would just need to iterate over that range.

2. Using Firefox’s FireBug, I was able to narrow down the source location of the business name, address, and city-state. I took note of each tag and its class as well as the tag that acted as a container for an entire business listing.

- Business Listing – <div class="vcard">...</div>

- Business Name – <span class="fn org"><a ...>NAME</a></span>

- Business Address – <span class="street-address">ADDRESS</span>

- Business City-State – <span class="city-state">CITYSTATE</span>

3. Not having any prior experience with web scraping, I decided to break this step up into two pieces in order to manage some of the risk of trying something new. Figuring it would take a little time to learn how to scrape data from an HTML page, I wanted to at least get some part of the process underway. It is quicker and more reliable to read an HTML page from disk (especially with my SSD) than over the network, so I decided to start an automated process that would systematically download the business listing HTML documents. Then I would dig into how to actually scrape said HTML documents, which would eventually just be waiting for me on my SSD.

3a. In order to get the HTML documents on my machine, I threw together a quick bash script that utilized wget.

    #!/bin/bash
    # Download all of the Omaha World Herald Yellow Page businesses
    #
    # The general form of the URL to wget is:
    #   http://www.owhyellowpages.com/search/business?page=0
    # To request each subsequent page, the number for page needs to be
    # incremented. The files should then be renamed. The naming scheme
    # from wget will follow this format:
    #   business?page=#
    # where the number (#) is the page number from 0 to 4215.

    url="http://www.owhyellowpages.com/search/business?page="
    file="./business?page="
    final_dir="./yellowpages/"
    final_file="business"

    # Create the output directory if it does not exist yet
    if [ ! -d "$final_dir" ]; then
        mkdir "$final_dir"
    fi

    for a in {0..4215}
    do
        wget "${url}${a}"
        mv "${file}${a}" "${final_dir}${final_file}${a}.html"
    done

It is not pretty, but it gets the job done (at github). I am sure there are any number of other approaches to doing this, so feel free to roll your own. Regardless, I start the script up and let it run because it is going to be a while. In the meantime, I move on to figuring out how to parse them.
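For anyone who would rather roll their own in Python, here is a minimal sketch of the same download loop. It is not the script I ran. It assumes Python 3 (so `urllib.request` instead of the `urllib2` used later in this post), and the names `download_range`, `fetcher`, and `delay` are made up for illustration. It keeps the same `business<N>.html` naming as the bash script and skips files that already exist, so an interrupted run can be restarted.

```python
import os
import time
import urllib.request

BASE_URL = "http://www.owhyellowpages.com/search/business?page="


def page_url(page):
    """Build the listing URL for a zero-based page number."""
    return BASE_URL + str(page)


def local_path(directory, page):
    """Mirror the bash script's naming: <directory>/business<page>.html."""
    return os.path.join(directory, "business{0}.html".format(page))


def fetch(url):
    """Download one page and return its raw bytes."""
    with urllib.request.urlopen(url) as response:
        return response.read()


def download_range(directory, first, last, fetcher=fetch, delay=0.0):
    """Save pages first..last (inclusive), skipping files already on disk."""
    os.makedirs(directory, exist_ok=True)
    saved = []
    for page in range(first, last + 1):
        path = local_path(directory, page)
        if os.path.exists(path):
            continue  # lets an interrupted run resume where it stopped
        with open(path, "wb") as handle:
            handle.write(fetcher(page_url(page)))
        saved.append(path)
        time.sleep(delay)  # optional pause between requests
    return saved
```

Something like `download_range("yellowpages", 0, 4215, delay=1.0)` would cover the same range as the bash loop. The `fetcher` argument exists only so the loop can be tried out with a stand-in function instead of hitting the site.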

3b. I have enjoyed using Python lately, so it seemed like a good choice for throwing together a quick scraping script. I did some searching and found the libraries I would need. To do HTML parsing, I considered using a standard module (HTMLParser), but then realized there were better options out there. I finally settled on using BeautifulSoup. I also found out that I could just as easily scrape the webpages from their URL (using urllib2) as I could from my local filesystem. I threw together a series of generic functions for doing the scraping and then two additional functions for scraping the HTML locally or online.

I start off by importing the necessary libraries and declaring a few global variables:

    # BusinessExtractor
    # the purpose of this module is to go through the 4000+ html pages from
    # www.owhyellowpages.com that contain business listings so as to extract
    # the business name, address, and possibly other relevant information.
    # Two approaches can be taken:
    # - access the file on disk and parse relevant content
    # - access the file online and parse the relevant content

    from bs4 import BeautifulSoup
    import urllib2

    # Some global variables
    htmlfile = "yellowpages/business" # need to add number and .html to end
    firstfile = "yellowpages/business0.html"

This is followed by two functions that can either access a file or a url that is HTML, turn it into a Soup object, and return that to the caller:

    # get_file_soup: String -> Soup
    # this function takes a file name and generates the soup object for
    # it using the BeautifulSoup library.
    def get_file_soup(filename):
        f = open(filename, 'r')
        html = f.read()
        soup = BeautifulSoup(html, "html5lib")
        f.close()
        return soup

    # get_url_soup: String -> Soup
    # given the URL for a website, this function will open it up, read in
    # the HTML and then create a Soup object for the contents. That Soup
    # object is returned.
    def get_url_soup(url):
        f = urllib2.urlopen(url)
        html = f.read()
        soup = BeautifulSoup(html, "html5lib")
        f.close()
        return soup

You may notice the extra argument in the BeautifulSoup constructor (“html5lib”). This specifies a parser that is different from the default one. The html5lib parser is more lenient in that it can parse poorly-formed HTML. In my opinion, that leniency is necessary to parse HTML written by random people/machines. Note that html5lib is a separate package that has to be installed alongside BeautifulSoup.

Now we need a function that will take a soup and find the portions of the HTML document that we are interested in (most of the document will be ignored).

    # list_each_vcard: Soup -> String[]
    # this function will go through the approx. 10 vcards for a given soup of
    # the html page, aggregate the desired pieces of info into a list, and then
    # return that list.
    def list_each_vcard(soup):
        vcards = soup.findAll('div', { 'class' : 'vcard' })
        businesses = []
        for vcard in vcards:
            items = []
            items.append(get_name_for_business(vcard))
            items.append(get_address_for_business(vcard))
            items.append(get_citystate_for_business(vcard))
            businesses.append(','.join('"{0}"'.format(w) for w in items))
        return businesses

There are three functions in the above bit of code that we haven’t seen yet. Don’t despair, I will show them each next. First, we can see BeautifulSoup shine with its simplicity. I call the findAll function on the Soup object, specifying that I want div tags with the class vcard (<div class="vcard">...</div>). It returns a list of Soup objects that make up the specific div tags that it encounters. I can now iterate on these to grab specific information out of each of them. Remember that each vcard div represents a business, so each contains the pieces of information I want to know about each business.

Here is a look at the get_name_for_business function:

    # get_name_for_business: Soup -> String
    # this function takes a particular vcard business from the overall
    # html soup and finds the name of the business and returns that unicode object
    def get_name_for_business(vcard):
        span = vcard.find('span', { 'class' : 'fn org' })
        # guard against a missing span before looking for the link inside it
        name = span.find('a') if span is not None else None
        if name is not None:
            innerstuff = name.contents
            if len(innerstuff) > 0:
                inner_item = innerstuff[0]
                return inner_item
            else:
                return ""
        else:
            return ""

The business name can be found inside the link tag (<a>) that is found inside the span of class 'fn org'. In 4000+ pages it is important to recognize the possibility that some business listings may be missing information or that the desired tags appear in excess. A quick solution for dealing with these sorts of situations is to check that what we find is not None. If it is None, we just return an empty string, which can easily be weeded out of the list later.

Here is a look at the get_address_for_business function:

    # get_address_for_business: Soup -> String
    # this function takes a particular vcard business from the overall
    # html soup and finds the street-address and returns that unicode object
    def get_address_for_business(vcard):
        address = vcard.find('span', { 'class' : 'street-address' })
        if address is not None:
            innerstuff = address.contents
            if len(innerstuff) > 0:
                inner_item = innerstuff[0]
                return inner_item
            else:
                return ""
        else:
            return ""

Similar to above, the address can be pulled out of the span tag of class 'street-address' and again we do the None check to add robustness.

Here is a look at the get_citystate_for_business function:

    # get_citystate_for_business: Soup -> String
    # this function takes a particular vcard business from the overall
    # html soup and finds the citystate info and returns that unicode object
    def get_citystate_for_business(vcard):
        citystate = vcard.find('span', { 'class' : 'city-state' })
        if citystate is not None:
            innerstuff = citystate.contents
            if len(innerstuff) > 0:
                inner_item = innerstuff[0]
                return inner_item
            else:
                return ""
        else:
            return ""

Lastly, to get the city and state, we find the span tag of class 'city-state' and again the None check is included.
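For comparison, here is a rough sketch of the same extraction done with only the standard-library HTMLParser that I mentioned passing over. It is not what I used. It assumes Python 3 (where the module lives at `html.parser`), the class name `VCardParser` and the helper `extract_businesses` are made up for illustration, and it is less forgiving of messy markup than html5lib. Unlike the functions above, it concatenates all of the text inside a span rather than taking only the first child.

```python
from html.parser import HTMLParser

# Map each span class from the listing markup to an output field.
FIELDS = {"fn org": "name", "street-address": "address", "city-state": "citystate"}


class VCardParser(HTMLParser):
    """Collect name, address, and city-state text from each vcard div."""

    def __init__(self):
        super().__init__()
        self.businesses = []   # one dict per vcard, in document order
        self._card = None      # dict for the vcard being read, if any
        self._div_depth = 0    # nesting depth of divs inside the vcard
        self._field = None     # field whose span is currently open

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class") or ""
        if tag == "div":
            if self._card is not None:
                self._div_depth += 1
            elif "vcard" in css_class.split():
                self._card = {"name": "", "address": "", "citystate": ""}
                self._div_depth = 1
        elif tag == "span" and self._card is not None and css_class in FIELDS:
            self._field = FIELDS[css_class]

    def handle_data(self, data):
        if self._card is not None and self._field is not None:
            self._card[self._field] += data

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "div" and self._card is not None:
            self._div_depth -= 1
            if self._div_depth == 0:
                # the vcard div is closed, so store the finished record
                self.businesses.append(
                    {key: value.strip() for key, value in self._card.items()})
                self._card = None
                self._field = None


def extract_businesses(html):
    """Parse an HTML string and return a list of business dicts."""
    parser = VCardParser()
    parser.feed(html)
    parser.close()
    return parser.businesses
```

Missing pieces simply stay as empty strings, which mirrors the None checks above. The bookkeeping for nesting depth and open spans is exactly the kind of work BeautifulSoup takes off your hands, which is a good part of why I went with it.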

4. With all these functions in place, we finally just need a function to put it all together and store all the data off to some CSV file. In this case, there will actually be two functions (one for the local file parsing and the other for the url file parsing):

    # scrape_range: String int int -> void
    # given the name of an output file, an int for the beginning of the range,
    # and an int for the end of the range, this function will go through each
    # set of business vcards and get the CSV business data. Each set of this
    # data will be written to the out file.
    def scrape_range(outfile, begin, end):
        csvfile = open(outfile, 'a')
        for a in range(begin, end+1):
            soup = get_file_soup(htmlfile + str(a) + ".html")
            for business in list_each_vcard(soup):
                csvfile.write(business + "\n")
        csvfile.close()

    # scrape_url_range: String int int -> void
    # given the name of an output file, an int for the beginning of the range,
    # and an int for the end of the range, this function will open up the URLs
    # in that range and then scrape out the business attributes from the vcards
    # that we are interested in. The scraped data will be written to the out file.
    def scrape_url_range(outfile, begin, end):
        csvfile = open(outfile, 'a')
        for a in range(begin, end+1):
            # use the loop variable (not begin) so each page is fetched once
            soup = get_url_soup("http://www.owhyellowpages.com/search/business?page=" + str(a))
            for business in list_each_vcard(soup):
                csvfile.write(business + "\n")
        csvfile.close()

The first, scrape_range, allows us to scrape locally stored HTML files whose numbers range between the two given integer values. The given string, outfile, is the name of the file that the CSV data will be written to. The second, scrape_url_range, instead allows us to scrape HTML files located at a particular URL whose numbers range between the two given integer values. Like the first, the given outfile string is the name of the CSV file to which the business listings will be written. Note: these range values work so well here because the HTML files and the URLs were deterministically associated with a range of integer values. Other scenarios might not be so clean and straightforward, in which case you would have to devise a different scheme for iterating over the pages.
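One caveat about the CSV output: list_each_vcard wraps each value in double quotes by hand, which would produce a malformed row if a business name itself contained a double quote. A hedged sketch of how the standard csv module could handle the quoting instead is below. It assumes Python 3, the function name `write_businesses` is made up, and the second sample row is a hypothetical business invented to show the escaping.

```python
import csv
import io


def write_businesses(handle, rows):
    """Write (name, address, citystate) rows with every field quoted."""
    writer = csv.writer(handle, quoting=csv.QUOTE_ALL, lineterminator="\n")
    for row in rows:
        writer.writerow(row)


buffer = io.StringIO()
write_businesses(buffer, [
    ("Academy Roofing", "2407 N 45th Ave", "Omaha, NE"),
    ('Joe "The Plumber" Smith', "1 Main St", "Omaha, NE"),  # hypothetical row
])
print(buffer.getvalue())
```

The writer doubles any embedded quote characters, so the file can be read back with `csv.reader` without the columns shifting.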

For the full source code, see the github repository.

In the end, we have a massive CSV file (on GitHub) that contains content like the following:

    "Academy Roofing","2407 N 45th Ave","Omaha, NE"
    "Accurate Heating & Cooling","11710 N 189th Plz","Bennington, NE"
    "Aksarben / ARS Heating, Air Conditioning & Plumbing","7070 S 108th Street","Omaha, NE"
    "BRT Construction","718 Avenue K","Carter Lake, IA"
    "Complete Industries Inc","9402 Fairview Road","Papillion, NE"
    "Critter Control","PO Box 27308","Omaha, NE"
    "Electrical Systems Inc","14928 A Cir","Omaha, NE"
    "Elite Exteriors","14535 Industrial Rd","Omaha, NE"
    "Goslin Contracting","6116 Military Ave","Omaha, NE"
    "Mangia Italiana","6516 Irvington Rd","Omaha, NE"
    "Papa Murphy's Take 'n' Bake","701 Galvin Rd S","Bellevue, NE"

After writing all the code, it was just a matter of letting this script run for a few hours, aggregating more than 40,000 business listings. From there, a person could imagine all sorts of use cases for the data, including the one we intended: training our NLP algorithms and/or matching candidate business names against a database of listings.
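To make the matching idea a little more concrete, here is a minimal sketch of looking up listed business names in a piece of agenda text. This is not what our team built at HackOmaha. It assumes Python 3, the function names are made up, and the normalization (lowercasing and collapsing punctuation) is just one simple choice among many.

```python
import csv
import io
import re


def normalize(name):
    """Lowercase a name and collapse punctuation and whitespace."""
    return re.sub(r"[^a-z0-9]+", " ", name.lower()).strip()


def load_names(handle):
    """Read the scraped CSV and index normalized names to original names."""
    index = {}
    for row in csv.reader(handle):
        if row and row[0]:
            key = normalize(row[0])
            if key:  # skip names that normalize to nothing
                index[key] = row[0]
    return index


def find_businesses(text, index):
    """Return listed business names that appear as whole phrases in text."""
    padded = " " + normalize(text) + " "
    return sorted(original for key, original in index.items()
                  if " " + key + " " in padded)
```

With the CSV opened as `handle`, `find_businesses(agenda_text, load_names(handle))` returns the listed names found in the text. It scans every name for each piece of text, which is slow but workable for a one-off pass over 40,000 or so listings.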

Comment below or join the conversation at Hacker News

What My Camera Has Taught Me

I have had my Nikon D3100 for about 3 months now and it has taught me some interesting things:

- Walk Slower – as a tall person with a long stride and as a person who is constantly busy, I am always quickly zipping back and forth between this and that. Carrying my camera around has taught me to slow down and look around. I have noticed things I never would have otherwise seen. It is amazing what you are missing until you start being more intentional about slowing down and looking around.

- Context Is Important – oftentimes, I will see something I deem to be ‘cool’ or ‘interesting’, like the dome on an old European church. I pull out my telephoto lens, zoom in as close as possible, and get the shot. Later, I will pull up the pictures on my computer, scan through them, and realize those photos are no good. I zoomed in too far. While I got a shot really close up to the dome, I completely cropped out the church and perhaps some of the surrounding buildings. The shot is no good because it has no context. A dome by itself means nothing without the church that it sits on. Context is important in all parts of life (not just photography). Nevertheless, rules were made to be broken and removing context on occasion can have just the right effect if you know what you are doing.

- Change Your Routine – with a personal goal of trying to take interesting pictures every day, I quickly realized how crippling a strict daily routine can be. I do and see the same things every day and that often doesn’t make for any good photos. So, change your routine. You still have to go to work, but take a different path to get there. You exercise every day, but try going to a different park or trail.

- Do Something New – the world is at your fingertips and it is 13+ inches along the diagonal. But why ‘experience’ things on your computer screen when you can actually go out and breathe them in, feel them, and see them in your own way? You can’t experience everything, but you can do quite a bit if you are intentional about it. And for the things you miss out on, well, that is what photographers like me are for.

To see the things I have experienced with camera in hand, check out my flickr photostream and my current photo set, Aperture Lab.

Josh’s Chili

Though this recipe is constantly evolving, the following is the gist of it:

Prep Time: 30-45 minutes

Cook Time: 4-8 hours

Ingredients:

- 1-2 cans of diced tomatoes (chili-ready is even better)

- 1-2 cans of kidney beans (chili-ready is even better)

- 1 can of tomato paste

- 1 medium white onion

- 1 green bell pepper

- 1 red bell pepper

- 1 bag of frozen whole kernel corn

- 1 pound of ground beef/turkey

- 1 pound of pork sausage (mild, medium, or hot – your preference)

- Worcestershire sauce

- chili powder (about 1/4 cup in all)

- ground cumin

- 1 can of beer (preferably PBR or Bud Light)

- chopped up carrots

- cooked andouille sausage

Directions:

- Brown the ground beef/turkey and sausage (remove grease)

- Chop up the onion, green bell pepper, and red bell pepper

- Add meat, onion, bell peppers, corn, diced tomatoes, and kidney beans to crock pot, adding some chili powder, ground cumin, and Worcestershire sauce as you go

- In a small sauce pan, add beer, tomato paste, 3-4 tbsp Worcestershire sauce, and about 1/4 cup chili powder and bring to a light boil

- Pour tomato paste mixture over the contents of the crock pot

- Stir a little to make sure everything is even and add any more spices as needed at this point

- Set the crock pot to low and let cook for 4 to 8 hours (the longer it cooks, the better the results and the flavor)

Have some crackers and shredded cheese ready; this is going to be tasty! This makes great leftovers and is easy to freeze and reheat later.

RPTR and ROSLaunchParser

I recently wrote a python script that does parsing of ROS launch files to get at information such as parameters and nodes declared in the file.

What is ROS? The Robot Operating System (ROS) is a framework of libraries and tools for developing distributed robotics software. My university uses it quite a bit to do Unmanned Aerial Vehicle (UAV) research.

I am a software engineer doing research on program analysis in the Java realm, so why am I dealing with ROS? One of my Spring 2012 classes was all about program analysis, with the final project being to develop a program analysis for ROS. The reason is that ROS is so new and ripe for novel program analyses.

My group decided to create a runtime monitoring analysis. Runtime Parameter Tracking for ROS (RPTR) is a system for tracking the runtime usage of parameters used in a ROS system. The concept of parameters in ROS is unique as compared to other languages and when ROS systems get large it can be difficult to keep track of them. RPTR basically keeps an eye on all the uses and definitions of parameters throughout a run of the system and provides a report to the developer. It also analyzes the reports itself to try to identify potentially problematic usage patterns.

One of my main tasks during the project was to write a script to parse launch files (ROS’s configuration files). I wrote a python script for this because it made the whole process quick and easy. I had started with Java and all the boilerplate for such a simple set of tasks was more work than it was worth. Anyway, I decided to put my script up on GitHub as ROSLaunchParser.
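To give a flavor of what parsing a launch file involves, here is a small sketch using the standard library's ElementTree. This is not the ROSLaunchParser code. It assumes Python 3, the sample launch file and the function name `parse_launch` are made up for illustration, and it only looks at `<node>` and `<param>` tags, ignoring things like `<rosparam>`, `<arg>`, `<include>`, and substitution arguments.

```python
import xml.etree.ElementTree as ET

# A made-up launch file used only for illustration.
SAMPLE_LAUNCH = """
<launch>
  <param name="robot_name" value="uav1" />
  <node pkg="demo_pkg" type="controller" name="controller">
    <param name="rate" value="50" />
  </node>
</launch>
"""


def parse_launch(xml_text):
    """Return (nodes, params) found anywhere in a launch file's XML."""
    root = ET.fromstring(xml_text)
    nodes = [dict(node.attrib) for node in root.iter("node")]
    params = []
    for parent in root.iter():
        # findall with a bare tag name only matches direct children
        for param in parent.findall("param"):
            params.append({
                "name": param.get("name"),
                "value": param.get("value"),
                # name of the enclosing node, or None for a global param
                "node": parent.get("name") if parent.tag == "node" else None,
            })
    return nodes, params


nodes, params = parse_launch(SAMPLE_LAUNCH)
print(nodes)
print(params)
```

Recording which node a parameter is declared under matters because a parameter inside a `<node>` tag lives in that node's private namespace, while one directly under `<launch>` is global.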

As some future work, it would be interesting to look at a large collection of runtime parameter logs and see what kinds of patterns emerge.

Southern Hospitality

I stopped for dinner just past Richmond, VA this evening. In an effort to eat somewhere both quick and new*, I decided to go to Steak n’ Shake.

I thought I understood it to be a typical burger-pushing fast food joint and was, thus, caught off guard and halted by the sign displayed at the front of the restaurant. It was one of those “Please Wait to be Seated” signs that you see in mediocre sit-down restaurants like the Olive Garden. Apparently Steak n’ Shake is a mediocre sit-down restaurant. I had half a mind to leave because waiting to be seated didn’t mesh well with my idea of getting a quick bite to eat. However, before I had a chance I was rather pleasantly greeted by one of the waitresses. She lured me in and shuffled me over to an empty booth.

This waitress had a genuine smile and aimed to be as helpful as possible. All in all, though, it seemed to be pretty standard behavior for someone who worked for tips. She brought out my ‘pop’ (as I called it) and then gave me some time to look over the menu. Soon after, she took my order and after putting it in, she stopped by one of the adjacent tables to wipe it down. She then looked over to me and asked where I was from. I told her, “Nebraska”, and she nodded, feeling affirmed, for she had guessed something of the like. She explained, “You asked what kind of ‘pop’ we have, so I figured you were from somewhere north of here.” She then asked what I was doing all the way in Virginia and I explained that I was here for an internship with NASA. She seemed a bit taken aback and impressed. This has been a pretty standard response that I have become accustomed to. In fact, I even give myself that response sometimes as I wonder how in the world I came to have such an internship. I went on to explain that I had been driving all day today and yesterday and that I still had to get to Hampton tonight. She sympathized with me, explaining that I only had another hour or so to drive.

The food came out quickly and she was sure to ask if I needed anything else. She also checked up on me a few times to see how the meal was. As soon as my Mr. Pibb was just about down to the ice, she was setting down a fresh one right next to it. I thanked her, thinking to myself, “I can definitely use some caffeine, I still have a ways to go.” As if she could read my mind, she stopped by not a minute later asking me, “Would you like a large to-go cup so that you can drink that on the go? No charge or anything.” I was surprised by the offer, but quickly accepted because it was just what I needed. She soon returned with a large styrofoam cup full of Mr. Pibb, setting it down next to my still mostly-full Mr. Pibb in a glass. Before I could even thank her, she set down something in a white pouch. She said, “Here’s a cookie too, some sugar to keep you going for the rest of the drive.” I slid from surprised to shocked. What a thoughtful and completely unnecessary thing to do!

Though she works for tips, this was not purely motivated by tips. This was simply some southern hospitality.

*I have eaten here once before a number of years ago, but there are none in or near Nebraska, so it is new enough.

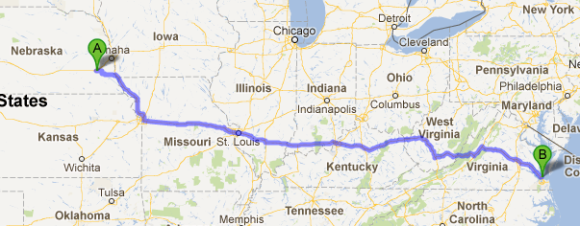

Virginia or Bust

After spending the weekend moving out of my apartment and cramming as much stuff into my car as possible, I set out on my two-day road trip to Virginia. I drove 700 miles today, which is easily the longest I have ever driven solo. It translated into 12 hours of driving and I have Mountain Dew and the audiobook of A Game of Thrones to thank for staying awake and sane the whole time.

Here is a list of fun observations for the day:

- There was an impressive amount of roadkill ranging from skunks to opossums to deer to raccoons to what I guess was a black lab. A small rabbit asked me to add it to the list, but I sent it scurrying back to the safety of the median.

- There were 3 traffic jams. Two of them were related to accidents and one was related to construction. These gave me a good chance to read missed text messages at least.

- St. Louis’ downtown looks awesome. It has a good mix of rusty industrial and shiny urban. Plus the arch looked pretty sweet with the sinking sun glinting off of it.

- There are no state troopers along I-70 or I-64.

- Motel 6 does not have shampoo; a bar of soap is as generous as they get. As the Google Places review says, “you are getting what you pay for.”

Another 550 miles remain for tomorrow. Hampton, VA here I come!

Formatting a Seagate FreeAgent Desk External Drive for Mac

I have a Seagate FreeAgent Desk External Drive (1.5 TB) that I used with a Windows computer for a while. Recently, though, I have mostly been using a Mac. Since I got a new camera (Nikon D3100), I have been taking a lot of pictures and filling up my Mac’s disk. Deciding I should offload 20 or 30 gigs of photos onto an external drive, I plugged my Seagate into my Mac. I seemed to only have read access, though, and had no way of creating folders or transferring photos. This was going to be a problem.

I did a little searching and found a number of forum posts related to this issue. People had this exact drive and wanted to use it with a Mac, but couldn’t. Why should the OS matter? It is just a disk drive connected through USB (there is a reason the U stands for universal). I don’t know the details of why this doesn’t work, but I do know how to set up the drive to work with a Mac.

The Solution.

- Plug the drive into your Mac

- Open up Disk Utility (Applications > Utilities > Disk Utility)

- Select the external drive from the list of drives on the left

- Select the partition option

- Change the volume scheme from current to 1 partition

- Name the partition

- Make sure Mac OS Extended (journaled) is selected

- Click Apply (this will format the disk)

- The disk will now be ready to use with a Mac

Note: If you already have files on the drive, back them up first, because the steps above reformat the drive and erase everything on it.